Given the project's time budget (110 man-hours), its scope, and the available resources, it was not possible to design a real-time tracking system. Instead, we implemented a Checkers Assistant that follows the flow of the game and suggests the next move. A frame is captured every 2 seconds and processed to detect the current game state, which is passed to the checkers engine; the engine replies with the best next move.


A standard 1.3-megapixel web camera was used to capture the images. The camera was mounted on a tripod that was modified so that it could capture images from directly above the board.

Figure 1. From left to right: the webcam, the acquisition table, and a team member.


When the game starts, a reference frame is taken of the initial state of the board.

Every 2 seconds a new frame is grabbed and compared pixel by pixel with the reference frame. If the difference is larger than a given threshold, a hand is over the board and another frame has to be grabbed. Otherwise, the board state can be calculated and the latest frame becomes the reference frame.
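The acceptance rule above can be sketched as follows. This is a minimal illustration, not the project's code: the text does not say how the per-pixel differences are aggregated, so the mean absolute difference, the threshold value, and the function names `mean_abs_difference` and `update_reference` are all assumptions. Plain Python lists stand in for grayscale frames to keep the sketch dependency-free.

```python
def mean_abs_difference(frame_a, frame_b):
    """Mean absolute per-pixel difference of two equally sized grayscale frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for a, b in zip(row_a, row_b):
            total += abs(a - b)
            count += 1
    return total / count


def update_reference(reference, frame, threshold=20.0):
    """Return (new_reference, frame_accepted).

    If the frame differs too much from the reference, a hand is assumed to be
    over the board: the frame is rejected and the old reference is kept.
    Otherwise the frame is accepted and becomes the new reference.
    The threshold of 20 gray levels is an assumed, illustrative value.
    """
    if mean_abs_difference(reference, frame) > threshold:
        return reference, False
    return frame, True


# Tiny 2x3 grayscale "frames" (values 0..255), for illustration only.
reference = [[10, 10, 10], [10, 10, 10]]
after_move = [[10, 10, 40], [10, 10, 10]]            # small local change
hand_over_board = [[200, 200, 200], [200, 200, 10]]  # large change

reference, ok_move = update_reference(reference, after_move)
reference, ok_hand = update_reference(reference, hand_over_board)
```

In this run the first frame is accepted and replaces the reference, while the second is rejected, so the reference still holds the frame taken after the move.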


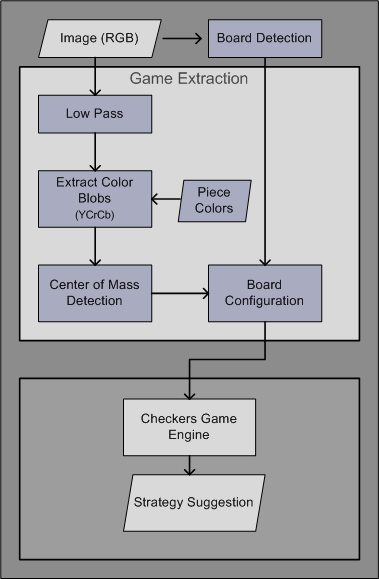

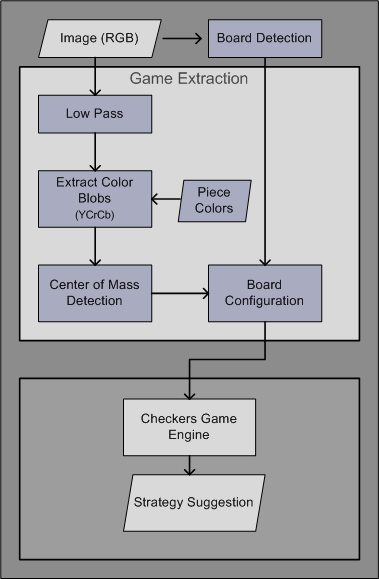

The game extraction phase is a module that receives an image and returns the current state of the board in the format the game engine requires to propose the next move.

This module is divided into two stages: piece detection and board detection. The first detects the positions of the pieces in the scene; the second detects the position and dimensions of the board as well as the configuration of its grid.


This module performs a color-based segmentation that detects the different pieces by their colors. One limitation of the method is that special pieces (kings), which usually have the same color as ordinary pieces, cannot be identified unless they are given a different color. We therefore used four different colors to detect the pieces: red, green, blue, and white.

Before segmenting the image, a standard low-pass filter (in this case a Gaussian filter) is applied to remove small instances of noise. The next step is a basic color-threshold segmentation in the YCrCb domain.
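The two steps above (smoothing, then thresholding in YCrCb) could look roughly like the sketch below. The 3x3 kernel size, the Cr/Cb ranges, and the function names are assumptions made for illustration; the text does not give the filter size or the thresholds that were actually used. The conversion uses the common ITU-R BT.601 full-range formulas, and plain lists of `(r, g, b)` tuples stand in for the image.

```python
def rgb_to_ycrcb(r, g, b):
    """Convert one 8-bit RGB pixel to YCrCb (ITU-R BT.601, full range)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + 128
    cb = (b - y) * 0.564 + 128
    return y, cr, cb


def gaussian_blur_3x3(channel):
    """Smooth a 2-D list with a 3x3 Gaussian kernel, replicating the borders."""
    kernel = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]  # weights sum to 16
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    acc += kernel[di + 1][dj + 1] * channel[ii][jj]
            out[i][j] = acc / 16
    return out


def segment_color(image, cr_range, cb_range):
    """Binary mask of pixels whose smoothed Cr and Cb fall inside the ranges."""
    # Blur each RGB channel first to suppress isolated noisy pixels.
    r, g, b = (gaussian_blur_3x3([[px[c] for px in row] for row in image])
               for c in range(3))
    h, w = len(image), len(image[0])
    mask = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            _, cr, cb = rgb_to_ycrcb(r[i][j], g[i][j], b[i][j])
            if cr_range[0] <= cr <= cr_range[1] and cb_range[0] <= cb <= cb_range[1]:
                mask[i][j] = 1
    return mask


# Synthetic 4x8 image: left half red, right half gray.
red, gray = (255, 0, 0), (128, 128, 128)
image = [[red] * 4 + [gray] * 4 for _ in range(4)]

# Assumed, illustrative ranges for "red" pieces.
red_mask = segment_color(image, cr_range=(180, 260), cb_range=(0, 128))
```

One mask would be produced per piece color, each with its own Cr/Cb ranges.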

Figure 2. Extracted Blobs before structured noise removal.

After the segmentation, the next step is to de-noise the binary images. Given the characteristics of the resulting images, we decided to use a morphological closing with a round 9x9 structuring element. The white-piece segmentation contained a large amount of clearly directional noise from the squares of the board; to eliminate it, an extra morphological de-noising step was applied: an erosion with a cross-shaped structuring element.
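For readers unfamiliar with the operation, a morphological closing is a dilation followed by an erosion with the same structuring element. The sketch below shows the idea on a small binary mask; the helper names are assumptions, and a radius of 1 is used only to keep the example small (a radius of 4 would give the round 9x9 element mentioned above). The same `erode` helper could be called with cross-shaped offsets for the extra step applied to the white pieces.

```python
def disk_offsets(radius):
    """Offsets of a round structuring element; radius 4 gives a 9x9 footprint."""
    return [(di, dj)
            for di in range(-radius, radius + 1)
            for dj in range(-radius, radius + 1)
            if di * di + dj * dj <= radius * radius]


def dilate(mask, offsets):
    """Set every pixel reachable from a foreground pixel through an offset."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if mask[i][j]:
                for di, dj in offsets:
                    if 0 <= i + di < h and 0 <= j + dj < w:
                        out[i + di][j + dj] = 1
    return out


def erode(mask, offsets):
    """Keep a pixel only if the whole structuring element fits in the foreground.

    Pixels outside the image are treated as background.
    """
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            out[i][j] = int(all(
                0 <= i + di < h and 0 <= j + dj < w and mask[i + di][j + dj]
                for di, dj in offsets))
    return out


def close(mask, offsets):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(mask, offsets), offsets)


# A 5x5 blob with a one-pixel hole, centered in a 9x9 mask.
blob = [[0] * 9 for _ in range(9)]
for i in range(2, 7):
    for j in range(2, 7):
        blob[i][j] = 1
blob[4][4] = 0  # hole left by the color threshold

closed = close(blob, disk_offsets(1))
```

After the closing, the hole at the center of the blob is filled while the outline of the blob is unchanged.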

Figure 3. Blobs after de-noising. Note: The noise at the borders of the board was removed using a ROI resulting from the board detection.

Once we had noise-free binary blobs corresponding to the pieces, we located their centers of mass. Combining this information with the configuration of the board, we could estimate quite accurately the position of the pieces on the 8x8 board.

Figure 4. Game extraction diagram.


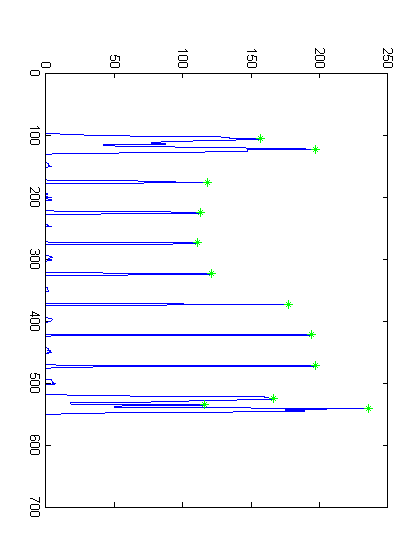

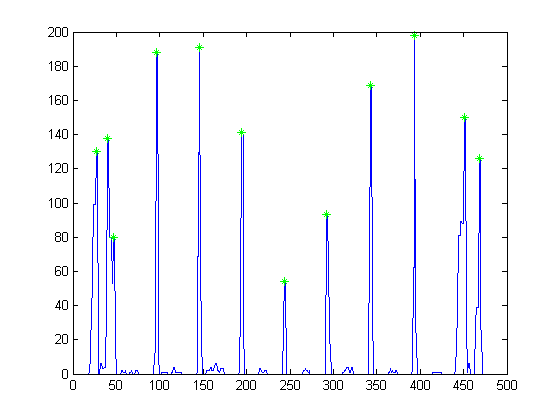

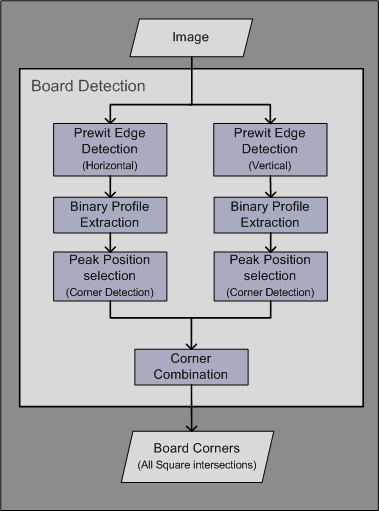

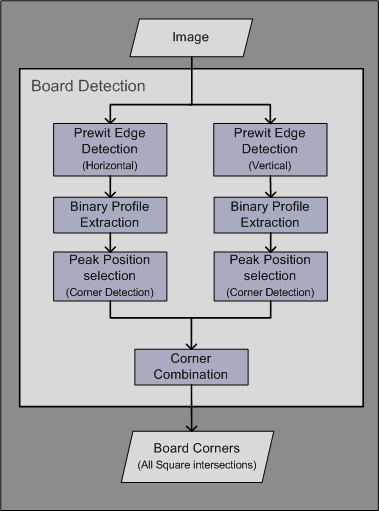

This step is an important prerequisite for the piece detection. Its purpose is to detect the boundaries and all the corners of the board. Since we assume that the board is more or less aligned with the camera, we decided to extract the horizontal and vertical profiles of the images, which give the approximate location of the corners of the board.

The first step was to apply a directional high-pass filter, independently in the horizontal and vertical directions, to keep only the edges in that direction. In a second step, the values of all the pixels along that direction were summed, as in the following figure.

Figure 5. Horizontal and vertical profiles.

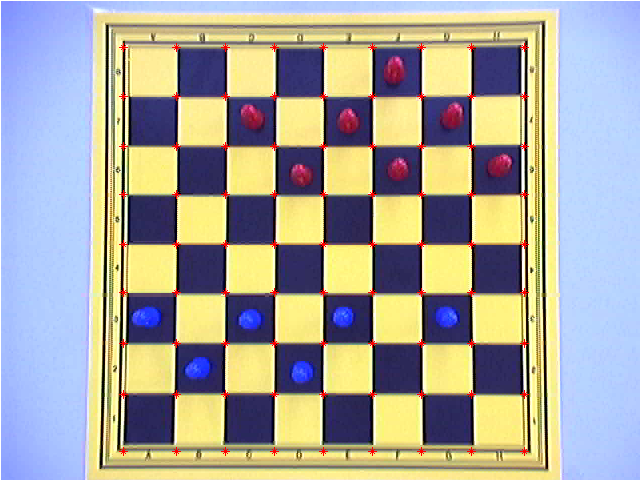

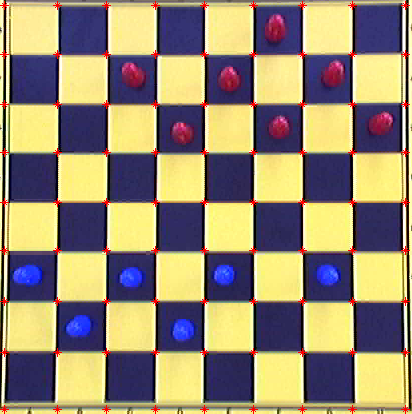

Once we had the profile information, we extracted the positions of all local maxima and selected those whose spacing is closest to the median of all distances between the local maxima. After completing this step in both directions (horizontal and vertical), we obtained the locations of all the corners of the board.
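The profile and peak-selection steps can be sketched in one direction as follows. The text does not specify the high-pass filter or how close to the median a spacing must be, so the simple pixel difference, the 25% tolerance, and all function names here are assumptions for illustration.

```python
from statistics import median


def horizontal_gradient(image):
    """Simple high-pass filter: difference between horizontally adjacent pixels."""
    return [[row[j + 1] - row[j] for j in range(len(row) - 1)] for row in image]


def column_profile(edge_image):
    """Sum the absolute filter response down each column."""
    return [sum(abs(row[j]) for row in edge_image)
            for j in range(len(edge_image[0]))]


def local_maxima(profile):
    """Indices of interior samples that rise above their left neighbour
    and are not lower than their right neighbour."""
    return [i for i in range(1, len(profile) - 1)
            if profile[i] > profile[i - 1] and profile[i] >= profile[i + 1]]


def regular_peaks(peaks, tolerance=0.25):
    """Keep peaks whose gap to a neighbouring peak is close to the median gap.

    Returns (kept_peaks, median_gap). The tolerance is an assumed value.
    """
    if len(peaks) < 2:
        return list(peaks), None
    gaps = [b - a for a, b in zip(peaks, peaks[1:])]
    spacing = median(gaps)
    keep = set()
    for a, b in zip(peaks, peaks[1:]):
        if abs((b - a) - spacing) <= tolerance * spacing:
            keep.update((a, b))
    return sorted(keep), spacing


# Tiny image with two vertical edges.
tiny = [[0, 0, 9, 9, 0, 0],
        [0, 0, 9, 9, 0, 0]]
tiny_profile = column_profile(horizontal_gradient(tiny))

# Synthetic profile: grid lines every 10 samples plus one spurious peak at 23.
profile = [0] * 60
for position in (10, 20, 30, 40, 50):
    profile[position] = 100
profile[23] = 30

peaks = local_maxima(profile)
grid_lines, spacing = regular_peaks(peaks)
```

Running the same procedure on the row profile gives the horizontal grid lines; the corners are the intersections of the two sets of lines, and the median gap is the square size used later to normalize the blob positions.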

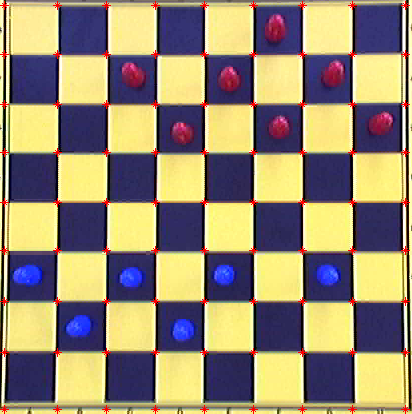

Figure 6. Corners of the board (marked in red).



To relate the location of the blobs in the image to the position of the pieces on the board, it was necessary to find the average distance between the lines of the board (from the board extraction step) and to normalize the positions of the blobs (their centers of mass) to an 8x8 board with the given square size. The following formula was applied:

Ppxy = Pixy / Ds

where Ppxy (the position on the board) and Pixy (the position of the blob in the image) are vectors of the form (X, Y) denoting X and Y coordinates, and Ds is the average distance between the corners. Note that since we are using a ROI based on the board detection, it is not necessary to shift Pixy to the left and to the top.
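As a worked illustration of the formula, the sketch below computes the center of mass of a single blob and maps it to a board square. Taking the integer part of the quotient and clamping it to the board are assumptions (the text only gives the division), as are the function names. A real mask would contain many blobs, which would first have to be separated, for example by connected-component labeling.

```python
def center_of_mass(mask):
    """Centroid (x, y) of the nonzero pixels of a binary mask holding one blob."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        raise ValueError("mask contains no foreground pixels")
    return sum_x / count, sum_y / count


def to_board_square(center, square_size, board_size=8):
    """Apply Pp = Pi / Ds per coordinate and return (column, row).

    The result is floored and clamped to the board (an assumption), and the
    coordinates are taken relative to the board ROI, so no offset is needed.
    """
    column = int(center[0] // square_size)
    row = int(center[1] // square_size)
    column = min(max(column, 0), board_size - 1)
    row = min(max(row, 0), board_size - 1)
    return column, row


blob = [[0, 0, 0],
        [0, 1, 1],
        [0, 1, 1]]
centroid = center_of_mass(blob)

# With 50-pixel squares, a blob centered at (130, 275) lies in column 2, row 5.
square = to_board_square((130.0, 275.0), square_size=50)
```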

After this step, it was possible to create an 8x8 matrix containing the configuration of the board and the pieces in the format required by the game engine.


For the move suggestion, we use one of the game engines from the Checkers Gameboard webpage. The engine is distributed as a .dll, so a C++ wrapper application had to be written. This application is called from within MATLAB with two parameters, passed as command-line arguments. The first parameter is the color that is to make the next move. The second parameter is the current board state, represented as an 8x8 matrix in which each cell indicates whether it is empty, free (not used), or holds a piece, and if so its type (man or king, white or black).

The wrapper application writes the suggested move to stdout, where it is parsed by the MATLAB function.
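The calling pattern can be illustrated with the sketch below, written in Python rather than MATLAB. Everything concrete in it is hypothetical: the executable name `checkers_wrapper`, the one-character cell codes, the 64-character board string, the choice of which squares are playable, and the shape of the reply are all invented for illustration, since the text does not document the wrapper's actual argument or output format.

```python
import subprocess

# Hypothetical one-character codes for the six cell states described above.
EMPTY, UNUSED = ".", "-"
WHITE_MAN, WHITE_KING, BLACK_MAN, BLACK_KING = "w", "W", "b", "B"


def start_position():
    """8x8 matrix for the opening position (assumed orientation: black on top)."""
    board = []
    for row in range(8):
        line = []
        for col in range(8):
            if (row + col) % 2 == 0:
                line.append(UNUSED)      # squares that are never played on
            elif row < 3:
                line.append(BLACK_MAN)
            elif row > 4:
                line.append(WHITE_MAN)
            else:
                line.append(EMPTY)
        board.append(line)
    return board


def encode_board(board):
    """Flatten the matrix row by row into one command-line argument."""
    return "".join("".join(row) for row in board)


def suggest_move(board, color, wrapper_path="./checkers_wrapper"):
    """Call the (hypothetical) wrapper and return whatever it prints to stdout."""
    result = subprocess.run(
        [wrapper_path, color, encode_board(board)],
        capture_output=True, text=True, check=True)
    return result.stdout.strip()


encoded = encode_board(start_position())
```

`suggest_move` is not called here because it needs the wrapper executable; a caller would pass the color to move and the detected board, then parse the returned text.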


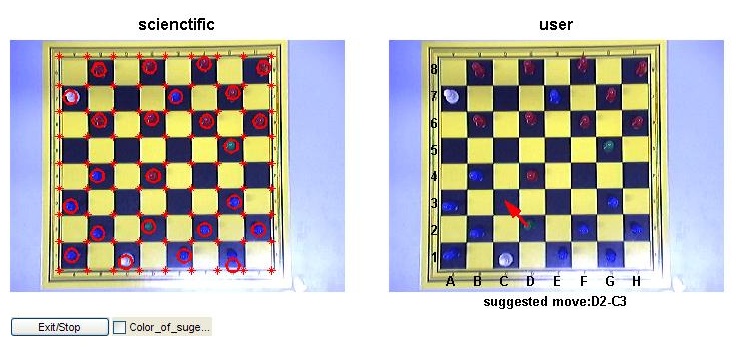

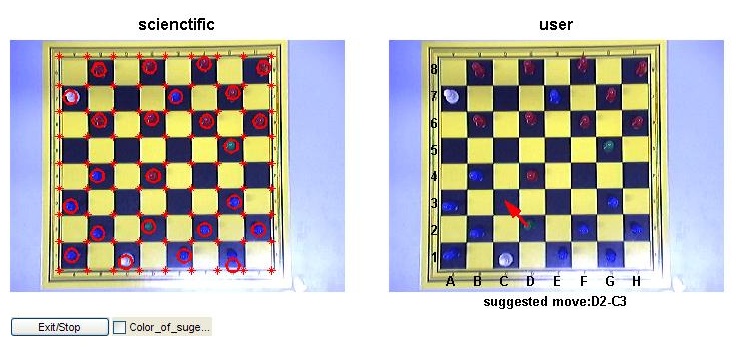

The results are visualized in a graphical window that is split in two. The left side shows the results of the detection steps; the right side shows the hints for the players. The suggested next move is indicated by an arrow.
